AIDA fellowships

AIDA's clinical fellows are healthcare employees who carry out an individual project on AI-based decision support in medical imaging, in collaboration with technical innovators. AIDA's technical fellows work in the same way, but are engineers or computer scientists who want to collaborate with clinical counterparts. Below are summaries of what AIDA's fellows are working on.

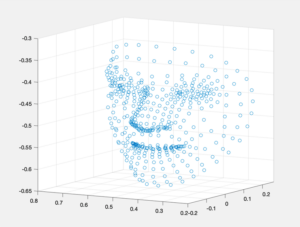

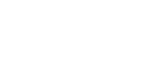

Longitudinal Assessment of Brain Metastases Tissue Volumes with Three-dimensional Convolutional Neural Networks

Maria Correia de Verdier

Uppsala University

Brain metastases are the most common central nervous system tumors. Advances in magnetic resonance imaging (MRI) have produced more sensitive, high-resolution sequences, leading to better detection of metastases. Together with increasingly effective therapies, this means that patients are living longer and are therefore at greater risk of developing new brain metastases over their lifetime. Detecting and characterizing longitudinal changes in brain metastases on MRI is a time-sensitive task that requires high sensitivity and specificity.

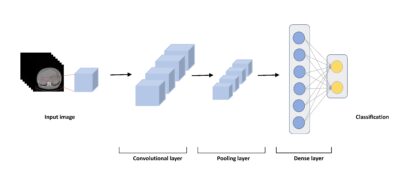

Automated detection, quantification, and longitudinal assessment of brain metastases represent an excellent opportunity to translate novel deep learning methods into clinical practice. The goal of this project is to develop and validate a convolutional neural network (CNN) for the analysis of brain MRIs in patients with brain metastases, which will distinguish among new/growing, stable, and shrinking metastases. The ultimate aim of this research is to decrease human bias, increase workflow efficiency, and improve patient outcomes by incorporating advances in computational performance and artificial intelligence (AI) into everyday radiology practice. The integration of AI tools into clinical workflows is expected to result in more rapid and precise quantitative assessments of disease burden, ultimately enabling more accurate and efficient diagnosis and treatment.
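In the project, a CNN is meant to make the distinction between new/growing, stable and shrinking metastases. As a minimal sketch of only the final bookkeeping step, assuming lesions have already been segmented and matched between two scans, the code below assigns each lesion to a category from its two volumes. The `Lesion` class, `classify_change` and the ±20 % relative-volume thresholds are hypothetical illustrations, not the project's method or a clinical response criterion.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cut-offs for illustration only: NOT the project's criteria
# and NOT an established clinical response standard.
GROWTH_THRESHOLD = 0.20   # relative volume increase counted as "growing"
SHRINK_THRESHOLD = -0.20  # relative volume decrease counted as "shrinking"


@dataclass
class Lesion:
    """One lesion matched across two MRI time points (hypothetical structure)."""
    lesion_id: str
    baseline_ml: Optional[float]  # None if the lesion was absent at baseline
    followup_ml: float            # segmented volume at follow-up, in ml


def classify_change(lesion: Lesion) -> str:
    """Assign 'new', 'growing', 'stable' or 'shrinking' from two volumes."""
    if lesion.baseline_ml is None or lesion.baseline_ml == 0:
        return "new"
    # Relative change with respect to the baseline volume.
    rel_change = (lesion.followup_ml - lesion.baseline_ml) / lesion.baseline_ml
    if rel_change >= GROWTH_THRESHOLD:
        return "growing"
    if rel_change <= SHRINK_THRESHOLD:
        return "shrinking"
    return "stable"


if __name__ == "__main__":
    lesions = [
        Lesion("met-1", None, 0.3),  # not seen at baseline
        Lesion("met-2", 1.0, 1.5),   # +50 %
        Lesion("met-3", 2.0, 2.1),   # +5 %
        Lesion("met-4", 1.0, 0.5),   # -50 %
    ]
    for lesion in lesions:
        print(lesion.lesion_id, classify_change(lesion))
```

The sketch keeps "new" and "growing" separate; merging them into the single new/growing group described above is a one-line change.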

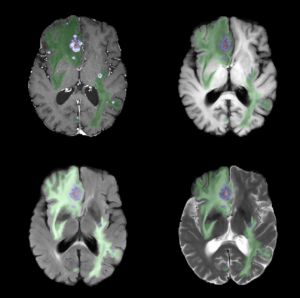

Vertebral fracture detection: improving detection and clinical workflow with an AI application

Mischa Woisetschläger

Region Östergötland

Osteoporosis (skeletal failure) is a serious condition that often goes undiagnosed and untreated, leaving many patients without proper fracture prevention. Osteoporotic fractures are frequent, expensive, and associated with high morbidity and mortality. Closing the gap between the prevalence of the disease and the low rate of identification and treatment of these patients is a top priority in several national guidelines. One challenge, both in Sweden and globally, is that osteoporotic vertebral fractures visible on thoracic/abdominal CT performed for other reasons are often missed and left unmanaged; detecting them in such scans is known as opportunistic screening. Automatic detection of vertebral fractures on routine CT scans could play a vital role in improving the care of this patient group.

In this clinical project, we have assessed various AI-supported tools for detecting vertebral fractures, including the validation of Flamingo, a product from Image Biopsy Lab (IBL) that has now received CE marking. We are now moving to the next phase: integrating the tool into the radiology workflow, evaluating it there, and connecting it to the fracture liaison service.

AI for Kidney Pathology

Robin Ebbestad

Karolinska Institutet

Glomerulonephritis is the most common cause of kidney failure in Sweden. As a group, glomerulonephritis encompasses several different conditions, ranging from childhood diseases to those affecting the elderly. The underlying causes also vary significantly and include, among others, autoimmune and genetic causes. This variety has made it very difficult to develop new effective treatments, leaving patients with few options. For the treatments that do exist, there is too little guidance for treatment decisions; guidelines often focus on broad markers of severity because individualized biomarkers are currently lacking. Personalized diagnostic tools are therefore urgently needed to improve care for patients with glomerular kidney disease.

In our group, we have developed new microscopy techniques that can visualize the nanoscale structures of the glomerulus in 3D. By combining deep-learning-based image analysis with 3D microscopy, we aim to uncover new disease signatures that can predict treatment response and patient outcomes. Ultimately, this three-dimensional AI assessment would support individualized treatment decisions and help establish precision medicine for kidney diseases.

Voice and face analysis to diagnose acromegaly

Konstantina Vouzouneraki

Umeå University

Acromegaly is a rare disease that causes enlargement of several organs, changes in facial features and voice, and comorbidities that lead to premature death. The rarity and slow progression of acromegaly hamper the diagnostic process, resulting in a diagnostic delay of 5 to 8 years from symptom onset. An image analysis system that detects subtle acromegalic facial changes may be a valuable diagnostic tool. We aim to analyze voice and face recordings from 140 Swedish acromegaly patients and 140 matched controls, investigating the use of pre-trained neural networks on face data, as well as different voice parameters, to identify acromegaly. Our vision is easily accessible software into which physicians, or even patients, can upload voice recordings, photos and symptoms to calculate the probability of acromegaly and receive advice on further investigations. A multimodal diagnostic tool for early identification of acromegalic changes in speech/voice and facial features has the potential to reduce diagnostic delay, patient suffering, morbidity and mortality.

Predicting tumor progression after portal vein embolization in liver cancer patients

Qiang Wang

Karolinska Institutet

Portal vein embolization is regarded as a standard procedure for increasing the size of the future liver remnant before extensive liver resection in patients with liver cancer. However, approximately 30% of patients cannot proceed to the scheduled liver resection because of tumor progression or insufficient growth of the future liver remnant during the waiting period after portal vein embolization (so-called “futile portal vein embolization”). Identifying patients at high risk of futile portal vein embolization is therefore clinically important when making a treatment plan. The aim of this study is to develop and externally validate a deep learning model, based on pretreatment computed tomography, for predicting tumor progression after portal vein embolization. Patients with liver cancer who underwent portal vein embolization at two Swedish medical centers will form the model development cohorts, while patients from a third center will serve as an independent validation cohort. A 3D convolutional neural network will be used for modelling. We hypothesize that the deep learning model developed in this study can help avoid futile procedures and identify patients who will benefit from portal vein embolization.

Online adaptive radiotherapy for head and neck cancer

André Änghede Haraldsson

Skåne University Hospital

Conventional radiotherapy begins with a reference image in the form of a computed tomography (CT) scan acquired in the treatment position. The relevant organs and the tumour intended for radiotherapy (RT) are delineated manually by a physician. This is followed by dose planning and optimisation of the treatment. The time from reference CT to the start of treatment is usually 4 to 10 days. Between the reference CT and treatment, between treatment fractions, and progressively during the course of treatment, the patient’s anatomy changes, sometimes to the degree that the whole procedure must be restarted with a new reference CT. Adaptive radiotherapy (ART) uses a suite of technologies in synergy to produce treatment plans based on the patient’s daily position, anatomy, and treatment progress.

Our project will use the state-of-the-art, stand-alone dose planning system RayStation for online adaptive radiotherapy on a Radixact helical tomotherapy system, initially focusing on head and neck cancer. The clinical benefits are considerable, but so is the challenge of finding feasible solutions within a clinical workflow. We will focus on deep learning for image reconstruction to improve image quality, followed by applications of AI for dose-response assessment on longitudinal data.

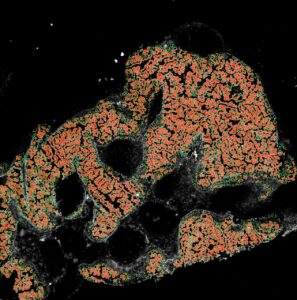

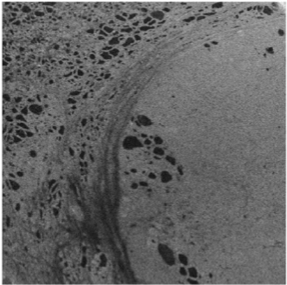

Super-resolution optical kidney pathology for clinical applications

Robin Ebbestad

Karolinska Institutet

The glomeruli of the kidney filter 180 liters of blood each day through a specialized multilayered structure called the glomerular filtration barrier. Glomerulonephritis, a heterogeneous group of autoimmune and inflammatory diseases of the glomeruli, is the leading cause of end-stage kidney disease in Sweden. Diagnosing this condition requires pathological assessment of a kidney biopsy sample. Current diagnostics in kidney pathology are limited to what a trained nephropathologist can assess qualitatively on thin sections of the biopsy, using a combination of histological stains, immunofluorescence and electron microscopy that has remained largely unchanged for decades.

Our novel method combines immunofluorescent labelling of disease-specific structures with the latest technology in sample preparation and super-resolution optical microscopy, allowing three-dimensional nanoscale visualization of the glomerular filtration barrier in health and disease.

Using deep learning methods, we aim to improve kidney research and diagnostics by identifying novel morphological alterations responsible for kidney disease and new therapeutic pathways, and to provide a fully automated, quantitative nephropathological assessment.

Ultimately, the three-dimensional AI assessment will support individualized treatment decisions and contribute to establishing precision medicine for kidney diseases.

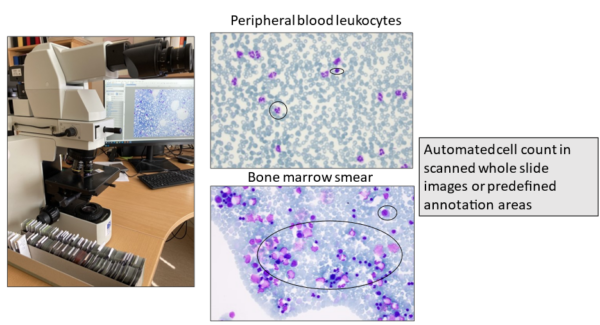

Image-based AI for assessment of peripheral blood and bone marrow aspirate smears

Leonie Saft

Karolinska Institutet

The cytomorphological examination of peripheral blood (PB) and bone marrow (BM) aspirate smears or imprints using light microscopy plays a central role in the routine diagnostic work-up of hematological diseases. Since the WHO classification relies on percentages of immature precursor cells (blasts) and other specific cell subsets to categorize certain entities, cytomorphological assessment is the current gold standard for diagnosis and follow-up of hematologic disorders. However, manual cell counting is labor-intensive, time-consuming and prone to intra- and interobserver variation, even among experts. The aim of this project is to develop image-based AI tools that assist with the quantitative and qualitative evaluation of PB and BM smears for implementation in routine diagnostic hematopathology. Specific tasks are to establish standards for an automated blast count and to develop AI tools for identifying and quantifying dysplasia in hematopoietic cells. Digital pathology is already widely used in many laboratories, and automated reporting can be integrated into the pathology report.
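Once every cell in a smear has been classified (manually or, as proposed here, by an AI model), the percentages that the WHO criteria depend on are simple proportions of the total count. The following is a minimal sketch, assuming per-cell labels are already available; the function name and label strings are hypothetical, and which cell types belong in the denominator is defined by the diagnostic criteria, not by this code.

```python
from collections import Counter
from typing import Dict, Iterable


def cell_type_percentages(labels: Iterable[str]) -> Dict[str, float]:
    """Percentage of each cell type among all classified cells."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no cells were classified")
    return {cell_type: 100.0 * n / total for cell_type, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical per-cell labels, e.g. the output of a cell classifier.
    labels = ["blast"] * 12 + ["neutrophil"] * 150 + ["lymphocyte"] * 38
    percentages = cell_type_percentages(labels)
    print(f"blasts: {percentages['blast']:.1f} %")  # 12 of 200 cells -> blasts: 6.0 %
```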

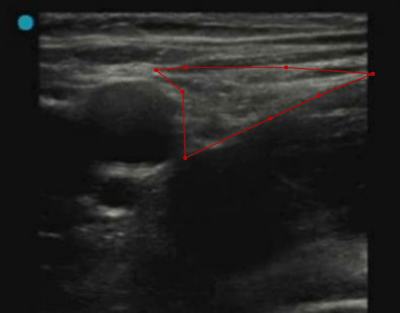

AI support for managing femoral nerve blocks in emergency hip fracture patients

Johan Berggreen

Lund University

Every year in Sweden, 18,000 patients, most of them elderly, suffer a hip fracture. The most common acute intervention is pain relief, and managing acute pain from a hip fracture can be a major challenge for healthcare professionals.

Systemic pain relief with drugs that act on the whole body, such as opiates, is most common, while regional blocks of specific nerves are used to a lesser extent. An advantage of a regional block is that injecting local anesthetic around the nerve that supplies the injured part of the body can provide adequate pain relief without the potentially serious side effects of systemic pain relief. Elderly patients with high comorbidity are a group in whom these side effects can pose a high risk.

Ultrasound-guided nerve blocks require extensive experience and are primarily performed by senior physicians in surgery and postoperative care. AIDA and the clinical fellowship support our effort to make the method more accessible by using artificial intelligence (AI). The AI is intended to provide real-time visual support to the user while the nerve block is being applied. The long-term vision for the project is to include AI support in portable ultrasound machines suitable for use in prehospital care.

Traumatic Brain Injury lesion segmentation in Computed Tomography

Jennifer Ståhle

Karolinska University Hospital

Traumatic brain injury (TBI) is the leading cause of death and disability in people under 40 years of age worldwide. The standard imaging modality at admission is non-contrast CT, owing to its wide availability and short acquisition time. The initial injury results from the mechanical forces of impact, leading to focal or diffuse brain injury patterns. In the days following the trauma, a complex cascade of intracranial events can cause secondary brain injury with further brain damage.

Immediate and accurate interpretation of the CT images is crucial to assess the need for neurosurgical treatment and to predict potential secondary injuries. Initial assessment is performed in settings ranging from Level 1 trauma centers to remote emergency departments, sometimes without an available radiologist, and interpretation shows considerable inter-observer variability. The development of machine learning algorithms is a crucial step toward reducing errors and refining diagnosis in the trauma setting, leading to improved outcomes for patients with TBI.

Drawing on experience from neuroradiology, neurosurgery and computer science, the project aims to develop AI methods that automatically segment traumatic lesions in CT images. Efforts will also be made to identify lesion injury patterns that may improve diagnosis, even for experienced radiologists. If successful, our results can contribute to the development of future diagnostic support tools to be tested in a clinical setting.

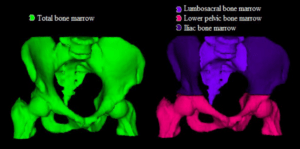

Convolutional neural networks for semantic segmentation of organs at risk in radiation therapy planning

Michael Lempart

Region Skåne

The segmentation of organs at risk (OAR) and target volumes plays a major role in external beam radiation therapy (EBRT). Delineation of anatomical structures and their boundaries is usually performed manually by clinical experts, such as oncologists or medical physicists. The segmented volumes are then used to compute a radiation dose. The overall segmentation process can be very complex, and the delineation accuracy is crucial for treatment outcome.

This project aims to evaluate the use of convolutional neural networks (CNN) for supervised semantic segmentation of OAR structures in EBRT. Two CT datasets of the pelvic region with delineated structures will be available, each including around 170 patients. An AI-based model would be of great value for accelerating the segmentation performed by oncologists, increasing overall segmentation quality and reducing delineation variation. Furthermore, a model that can generate accurate segmentations of structures in the pelvic region might be used for several different patient groups or to build more accurate models for predicting radiation toxicity.
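One common way to quantify delineation variation, or the agreement between a model's segmentation and an expert's, is the Dice similarity coefficient, 2|A∩B| / (|A| + |B|). The summary above does not say which metric the project uses, so the following is only a generic NumPy sketch on binary masks; the function name and the convention of returning 1.0 for two empty masks are assumptions.

```python
import numpy as np


def dice_coefficient(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Dice similarity of two binary masks of equal shape (1.0 = identical)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must have the same shape")
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (convention)
    return float(2.0 * np.logical_and(a, b).sum() / denom)


if __name__ == "__main__":
    # Two hypothetical 2D delineations of the same structure, 4 pixels each.
    observer_1 = np.zeros((4, 4), dtype=bool)
    observer_1[0:2, 0:2] = True
    observer_2 = np.zeros((4, 4), dtype=bool)
    observer_2[1:3, 0:2] = True
    print(dice_coefficient(observer_1, observer_2))  # 2 shared pixels -> 0.5
```

The same function works unchanged on 3D CT label volumes, since it only counts voxels.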

The project will be performed at the department of radiation physics (Skåne University Hospital) in close co-operation with the mathematical imaging group at the centre for mathematical sciences, LTH, Lund.

Deep learning for thyroid pathology classification using 3D-OCT

Neda Haj Hosseini

Linköping University

Optical coherence tomography (OCT) is used in clinical routine in ophthalmology and cardiovascular imaging and has high potential for other medical applications. In this project, OCT is used with the end goal of intraoperative surgical guidance: developing methods that surgeons can use for a quick, preliminary assessment of tissue abnormality to avoid unnecessary tissue removal, and that pathologists can use to view the tissue volume before preparing samples for microscopy analysis.

The project aims to apply deep learning to 3D-OCT images for automatic analysis and classification of diseased versus healthy thyroid tissue. OCT images are highly promising for showing the tissue microstructure of the thyroid; however, they are challenging to segment because of speckle noise in the scans and the depth-dependent signal strength. Deep learning methods can improve the image analysis and tissue classification.

Constructing a deep neural network for choosing suitable CT protocols

Henrik Andersson

Skånes Universitetssjukhus, Region Skåne

When a referral for an emergency computed tomography (CT) exam arrives, it is up to the radiologist to choose a suitable CT protocol. During a typical day on call, this task takes up a considerable amount of time and repeatedly interrupts the radiologist’s workflow, reducing effectiveness and increasing the risk of errors in tasks performed simultaneously, such as the interpretation of radiological exams.

The choice of CT protocol for each patient is based on the specific clinical circumstances, including the patient’s symptoms, clinical findings, comorbidities, age and renal function. Since most of this information is provided as free text in the referral, automated protocol selection is currently not available.

The aim of this project is to build, train and evaluate a deep neural network that, in the emergency setting, can choose a suitable CT protocol based on the referral text. Such a network should also be well suited for scaling up to non-emergency CT exams, for both inpatients and outpatients, and to other modalities such as MRI and ultrasound.

Neuroradiology data to improve Data Hub functionality

Helene van Ettinger-Veenstra

Karolinska Institutet

The project aims to develop a deep neural network that classifies chronic widespread pain using structural MR images. We will also investigate whether functional MR images and clinical data can further improve this classification. Chronic widespread pain, such as fibromyalgia (FMS), causes great suffering yet is difficult to treat, partly because treatment effectiveness is unpredictable. As fibromyalgia is likely a heterogeneous group, classifying subgroups may be key to a better initial choice of treatment. A convolutional neural network (CNN) is suitable for handling structural images together with clinical data such as questionnaires, and leaves room for adding functional data, blood samples, etc. Structural images of pain patients are a new addition to the current AIDA fellowships, and the wealth of clinical data available from neuroradiology and psychology, including fMRI data and clinical variables, has the potential to open up new research and data-analysis initiatives. The output of this project can support future diagnosis of chronic pain and will also be able to classify subclasses of chronic pain; this information can then be used in investigations of treatment optimization.

Novel Automatic Methods for Detection and Verification of Positioning Markers in an MRI-Only Workflow for Prostate Cancer Radiation Therapy

Christian Jamtheim Gustafsson

Region Skåne

Radiation therapy treats prostate cancer by delivering high radiation doses. For accurate dose delivery, gold fiducial markers are inserted into the prostate and used to localize it. CT images are used to plan and calculate the radiation dose, and the fiducials are easily visible in them. MRI provides better soft tissue visualization of the prostate and adjacent tissues than CT, but CT is still needed for dose calculations. MRI and CT images are therefore used in combination for radiotherapy planning. However, using images from multiple modalities requires image registration, which can introduce uncertainties in the planning and thus contribute to a non-optimal treatment.

These uncertainties can be eliminated by basing treatment planning solely on MRI images, referred to as MRI-only. Omitting the CT examination also saves time for the patient and can reduce hospital costs. An efficient MRI-only workflow requires fiducials to be identified in MRI images with high accuracy and confidence, which is a major challenge. Furthermore, fiducial identification is a manual process that can be associated with significant inter-observer variation and a considerable time requirement. These issues need to be resolved for cost-effective, large-scale MRI-only implementations.

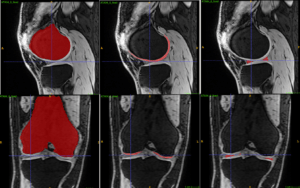

Integrating AI Based Image Segmentation into the Clinical Workflow of Knee Surgery Planning

Simone Bendazzoli

Novamia AB

The aim of this project is to build a standalone software application that integrates AI-based segmentation methods with other image analysis tools. The software will support the clinical workflow for preoperative planning of knee surgery using high-resolution MRI images. The proposed solution is expected to reduce the work needed to create personalized 3D models from patients’ MRI scans, generate quantitative measurements of the damaged parts, and help the surgeon evaluate and plan the surgery. Without AI, this process can take trained medical engineers days to complete; with advanced deep learning tools, it could be shortened to less than an hour. The project will focus on tailoring the tools for real clinical use, with easy deployment, a user-friendly GUI and an intuitive workflow in mind. The project will be conducted in collaboration with Dr. Chunliang Wang from KTH, who is the PI of another AIDA project on simultaneous landmark detection and organ segmentation in medical images.

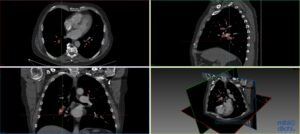

Collaboration on Automatic Detection of Pulmonary Emboli in CTPA Examinations

Dimitris Toumpanakis, MD

Uppsala University Hospital

Pulmonary embolism (PE) is a medical emergency in which blood clots travel to and occlude the pulmonary arteries. CT pulmonary angiography (CTPA) has become the gold standard imaging modality for the diagnosis of PE and is now one of the most common radiological examinations performed in the emergency setting.

Today, CTPA examinations are interpreted manually by radiologists, which is time-consuming and affected by the stressful conditions of emergency medical care. Several automatic PE detection systems have been developed, but none with accuracy acceptable for clinical use.

This project aims to develop a deep neural network for fast and precise automatic identification of pulmonary emboli in CTPA examinations that will be suitable for use in the clinical setting. To achieve this, the efforts of our team focus on the application of deep learning techniques on a large set of structured and meticulously annotated CTPA examinations.

The resulting system will save time for radiologists and enable more timely diagnosis and treatment for patients with PE, reducing the risk of a fatal event and improving clinical outcomes. In addition, the resulting data, methods and experience will help pave the way for other applications of automated medical image analysis in thoracic radiology and emergency diagnostics.

AI for Prostate Cancer Screening

Patrick Masaba

Karolinska Universitetssjukhuset Solna

Prostate cancer is the male counterpart of breast cancer in women; in Sweden, there are 9,000 to 10,000 new cases and 2,500 deaths from the disease each year. Unlike women, men are not offered a screening program.

The current diagnostic chain involves a blood test for prostate-specific antigen (PSA) which, if elevated, leads to transrectal ultrasound-guided biopsies. The 10 to 12 biopsies are taken systematically, without knowledge of where a cancer, if present, is located, and therefore miss up to 50% of the significant cancers that need treatment.

New evidence shows that pre-biopsy magnetic resonance imaging (MRI) of the prostate, followed by targeted biopsies of tumor-suspicious areas, is superior to the current diagnostic chain. Pre-biopsy MRI will be recommended in the recently revised Swedish national guidelines. This will lead to a large increase in the number of MRI examinations and, consequently, in the radiologists’ workload.

Our project aims to combine knowledge from radiology, urology, epidemiology, statistics and computer science to develop an AI-based digital tool that could independently diagnose and screen MRI images for potential signs of prostate cancer, markedly shortening the time to diagnosis and start of treatment.

If successful, our tool could greatly ease the growing demand on the medical workforce to diagnose and treat prostate cancer. Rapid, real-time screening and diagnosis would become feasible and could lead to a national screening program for men. It would also contribute to the broader adoption of smart algorithms in healthcare at the national level.

Annotated Mammography Database for Testing and Developing AI Applications

Victor Dahlblom

Skånes universitetssjukhus, Region Skåne

Breast cancer is the most common type of cancer in women. To detect the disease as early as possible and thereby reduce breast cancer mortality, considerable effort has gone into establishing mammography screening programs. Despite screening, a significant number of cancers are still discovered through clinical symptoms between screenings. Computer-aided detection based on artificial intelligence (AI) could make screening more efficient in terms of both resources and results. Developing and testing such systems requires access to large databases of annotated cases.

This project aims to build a database of mammograms from the Malmö Breast Tomosynthesis Screening Trial (MBTST) to make the material accessible for testing different AI-based analysis tools. The MBTST is a breast cancer screening trial that includes digital mammograms and breast tomosynthesis examinations from 15,000 women with established ground truth. The next planned step is to build a large database of all mammograms acquired in Malmö from 2004 onwards.

By using AI systems to assist the human reader in mammography screening, we foresee that more breast cancers may be detected in time and that reading could become less time-consuming, which in the long run would make the screening program more effective.

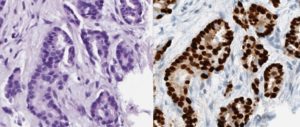

AI-driven Image Analysis in Digital Pathology

Gordan Maras

Klinisk patologi och cytologi, Region Gävleborg

Digital pathology has been gaining attention from both clinical pathologists and technical developers in recent years. One of the interesting possibilities of digitized pathology is the use of image analysis. In parallel with the development of digital pathology, more advanced technologies have also expanded, and one especially eye-catching area is artificial intelligence (AI). Applying AI in digital pathology could create powerful and versatile tools for clinical use, for instance for automatic counting of specific cells or recognition of tumor cells within a lymph node.

The upcoming project aims to investigate the efficiency and accuracy of AI-driven image analysis for counting specially stained cells (i.e. hormone-positive cells). It will also examine the speed of the calculations and how these factors may be improved.

Such tools for digital pathology would allow more precise reporting to physicians, giving them a better basis for decision-making, which in turn should lead to better and more effective patient care.

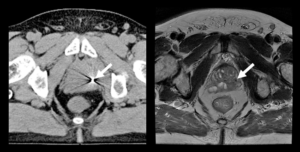

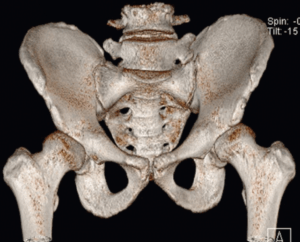

Collaboration on Simultaneous Landmark Detection and Organ Segmentation in Medical Images Using Multi-Task Deep Neural Network

Bryan Connoly

Karolinska sjukhuset Huddinge

Analysis of anatomy is an essential task carried out on all medical images. It can be time-consuming and cumbersome, taking time that could be better spent analyzing pathology or planning treatment. This project aims to develop a multi-task deep neural network (DNN) for the detection of key anatomical structures on radiological images; the network will also extract regions of interest (ROI) for further image analysis. It will be applied in orthopedics, specifically to 3D CT images of the hip in preparation for surgery. The system will automatically label bones, important landmarks, organs and ROIs. It will be able to learn from each image it processes, ultimately leading to improved accuracy as the number of analyzed images increases. This will save time for radiologists and surgeons and lead to more accurate diagnostics, which in turn will reduce risk and improve the quality of care and outcomes for orthopedic patients. The automated process will also help pave the way for other applications, in orthopedics and in every other part of the body, thus taking a step towards improved patient care with the help of computer analysis.
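The project's network is multi-task and also extracts ROIs for further analysis. Without claiming how the project does this, a minimal sketch of one generic post-processing step is shown below, assuming a voxel-wise label map has already been produced by some segmentation model: it derives a padded bounding box around one label and uses it to crop the CT volume. The function name, label value, margin and random stand-in image are all hypothetical.

```python
from typing import Optional, Tuple

import numpy as np


def roi_bounding_box(
    label_map: np.ndarray, label: int, margin: int = 0
) -> Optional[Tuple[slice, ...]]:
    """Padded bounding box (as slices) around all voxels with the given label.

    Returns None if the label does not occur in the label map.
    """
    coords = np.argwhere(label_map == label)  # (N, ndim) voxel indices
    if coords.size == 0:
        return None
    lower = np.maximum(coords.min(axis=0) - margin, 0)
    # +1 because slice ends are exclusive; clip to the volume shape.
    upper = np.minimum(coords.max(axis=0) + margin + 1, label_map.shape)
    return tuple(slice(int(lo), int(hi)) for lo, hi in zip(lower, upper))


if __name__ == "__main__":
    ct = np.random.default_rng(0).normal(size=(10, 10, 10))  # stand-in image
    labels = np.zeros_like(ct, dtype=np.int32)
    labels[2:4, 5:8, 1:2] = 3  # hypothetical label value for one structure
    box = roi_bounding_box(labels, label=3, margin=1)
    roi = ct[box]  # cropped region for further analysis
    print(box, roi.shape)
```

Returning plain slices keeps the box usable for cropping the image, the label map, or any co-registered volume with the same indexing.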

Automatic Detection of Lung Emboli in CTPA Examinations

Tomas Fröding

Nyköping Hospital

Pulmonary embolism (PE) is a serious condition in which blood clots travel to, and occlude, the pulmonary arteries. To diagnose or exclude PE, radiologists perform CT pulmonary angiography (CTPA). Today, CTPA examinations are interpreted manually by a radiologist, which is time-consuming and dependent on human factors, especially under the stressful conditions of emergency medical care. Several automatic PE detection systems have been developed, but none with accuracy acceptable for clinical use. A general limitation of these previous efforts has been the lack of expert-annotated CTPAs for training and validating the systems.

This clinical fellowship at AIDA will support our team's efforts to develop a system for fast and precise automatic identification of pulmonary emboli in CTPA examinations. It will to a considerable extent be devoted to structured and detailed annotation of a large set of CTPA examinations, aiming to remove a major obstacle to applying deep learning techniques in medical image analysis.

The resulting system will save valuable time both for patients suspected of having PE and for radiologists in their daily clinical routine. This will, in turn, have a strong positive impact on the Swedish health system, considering that CTPA is today one of the most common emergency CT examinations in the country.

More information

AIDA has its physical base at the Center for Medical Image Science and Visualization (CMIV) at Linköping University. CMIV has many years of experience in tackling technical challenges in medical imaging and bringing the solutions into clinical practice. CMIV is also internationally recognized for its clinically oriented, interdisciplinary cutting-edge research in imaging and functional medicine, with activities in imaging, image analysis and visualization. You can read more about AIDA and CMIV at liu.se/forskning/aida